The boom in artificial intelligence has had such a profound effect on big technology companies that their energy consumption, and with it their carbon emissions, has surged.

The spectacular success of large language models such as ChatGPT has helped fuel this growth in energy demand. At 2.9 watt-hours per ChatGPT request, AI queries require about 10 times the electricity of traditional Google searches, according to the Electric Power Research Institute, a nonprofit research firm. Emerging AI capabilities such as audio and video generation are likely to add to this energy demand.

The energy needs of AI are changing the calculus of energy companies. They are now exploring previously untenable options, such as restarting a nuclear reactor at the Three Mile Island power plant, the site of the infamous disaster in 1979.

Data centers have grown continuously for decades, but the magnitude of growth in the still-young era of large language models has been exceptional. AI requires far more computing and data storage resources than the pre-AI rate of data center growth could provide.

AI and the power grid

Thanks to AI, the power grid – in many places already near capacity or prone to stability problems – is under more pressure than before. There is also a substantial lag between computing growth and grid growth. Data centers take one to two years to build, while adding new power to the grid requires over four years.

According to a recent report by the Electric Power Research Institute, just 15 states contain 80% of the data centers in the United States. In some states, such as Virginia, home of Data Center Alley, data centers astonishingly consume over 25% of the state's electricity. Other parts of the world are seeing similar trends in clustered data center growth. Ireland, for example, has become a data center nation.

Along with the need to add more power generation to sustain this growth, nearly all countries have decarbonization goals. This means they are striving to integrate more renewable energy sources into the grid. Renewables such as wind and solar are intermittent: the wind doesn't always blow and the sun doesn't always shine. The lack of cheap, green and scalable energy storage means the grid faces an even bigger problem in matching supply with demand.

Further challenges to data center growth come from the increasing use of water cooling to improve efficiency, which strains limited freshwater sources. As a result, some communities are pushing back against new data center investments.

Better technology

The industry is addressing this energy crisis in several ways. First, computing hardware has become far more energy efficient over the years in terms of operations performed per watt consumed. Data center power usage effectiveness, a metric that compares a facility's total power draw with the power that reaches its computing equipment (the remainder goes to cooling and other infrastructure), has been reduced to 1.5 on average, and in advanced facilities even to an impressive 1.2. New data centers have more efficient cooling through water cooling and, where available, external cool air.
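
The 1.5 and 1.2 figures are easier to read with the standard definition of power usage effectiveness (PUE) in hand: total facility energy divided by the energy delivered to the IT equipment. The following is a minimal sketch of that arithmetic. The function names and the 1,000 kWh IT load are hypothetical, chosen only for illustration; they do not come from the Electric Power Research Institute report.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power usage effectiveness: total facility energy / IT equipment energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    return total_facility_kwh / it_equipment_kwh


def overhead_share(pue_value: float) -> float:
    """Fraction of total facility energy spent on cooling and other non-IT overhead."""
    if pue_value < 1:
        raise ValueError("PUE cannot be below 1")
    return 1 - 1 / pue_value


def total_energy_kwh(it_equipment_kwh: float, pue_value: float) -> float:
    """Total facility energy needed to deliver a given IT load at a given PUE."""
    return it_equipment_kwh * pue_value


# Hypothetical IT load, used only to compare the two PUE values in the text.
it_load_kwh = 1000.0
average_total = total_energy_kwh(it_load_kwh, 1.5)   # facility at the average PUE
advanced_total = total_energy_kwh(it_load_kwh, 1.2)  # facility at the advanced PUE
savings_fraction = 1 - advanced_total / average_total
```

Under these assumptions, a facility at a PUE of 1.5 spends about a third of its energy on overhead, a facility at 1.2 spends about a sixth, and moving from one to the other cuts total energy for the same computing load by about 20%.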

Unfortunately, efficiency alone won't solve the sustainability problem. The Jevons paradox describes how efficiency gains can lead to an increase in energy consumption in the long run, because cheaper computing invites more use. In addition, hardware efficiency gains have slowed significantly because the industry has reached the limits of chip technology scaling.

To further improve efficiency, researchers are developing specialized hardware such as accelerators, new integration technologies such as 3D chips, and new chip cooling techniques.

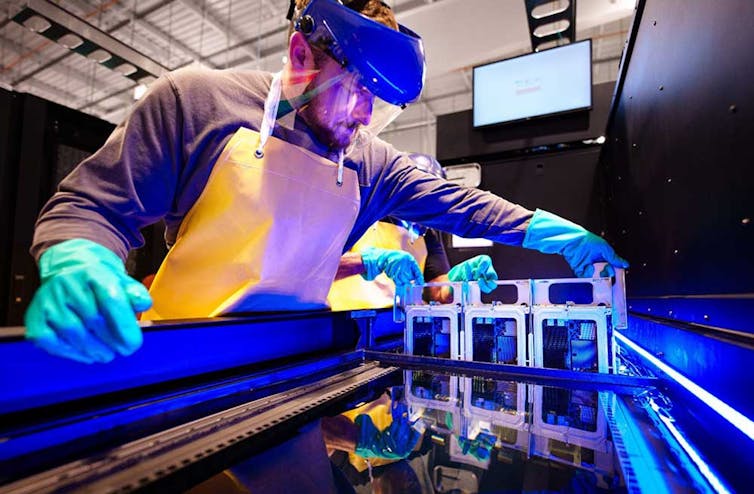

Researchers are also increasingly studying and developing cooling technologies for data centers. The Electric Power Research Institute report endorses new cooling methods, such as air-assisted liquid cooling and immersion cooling. While liquid cooling has already found its way into data centers, only a few new data centers have implemented immersion cooling, which is still under development.

Craig Fritz, Sandia National Laboratories

Flexible future

A new way to build AI data centers is flexible computing. The basic idea is to compute more when electricity is cheaper, more available and greener, and less when it is more expensive, scarce and polluting.

Data center operators can convert their facilities into a flexible load on the grid. Academia and industry have provided early examples of data center demand response, where data centers regulate their power depending on the needs of the power grid. For example, they can schedule certain computing tasks for off-peak hours.
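
One way to picture this kind of scheduling is a small sketch that places deferrable jobs into the lowest-cost hours available before their deadlines. Everything here is an assumption made for illustration: the `Job` fields, the `schedule_flexible` function, the one-slot-per-hour capacity and the cost values are hypothetical, and the cost signal could just as well be a price or a carbon-intensity forecast. The greedy rule is simple rather than optimal; real demand response systems are considerably more involved.

```python
from dataclasses import dataclass


@dataclass
class Job:
    name: str
    hours_needed: int  # hours of compute required; may be split across hours
    deadline: int      # job must run in hours with index < deadline


def schedule_flexible(jobs, hourly_cost, capacity_per_hour=1):
    """Greedy sketch: handle jobs by earliest deadline, and give each one the
    cheapest hours before its deadline that still have free capacity."""
    free = [capacity_per_hour] * len(hourly_cost)
    plan = {}
    for job in sorted(jobs, key=lambda j: j.deadline):
        last_hour = min(job.deadline, len(hourly_cost))
        candidates = [h for h in range(last_hour) if free[h] > 0]
        if len(candidates) < job.hours_needed:
            raise ValueError(f"not enough free hours for job {job.name!r}")
        cheapest = sorted(candidates, key=lambda h: hourly_cost[h])
        chosen = sorted(cheapest[: job.hours_needed])
        for h in chosen:
            free[h] -= 1  # one capacity slot used in that hour
        plan[job.name] = chosen
    return plan


# Hypothetical cost signal for six hours (for example, price per MWh).
hourly_cost = [50, 40, 20, 10, 30, 60]
jobs = [
    Job("model-training", hours_needed=2, deadline=6),
    Job("batch-inference", hours_needed=1, deadline=3),
]
plan = schedule_flexible(jobs, hourly_cost)
```

With these made-up inputs, the job with the tighter deadline takes hour 2, the cheapest hour it is allowed to use, and the training job takes hours 3 and 4, so neither runs during the most expensive hours.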

Achieving broader and greater flexibility in power consumption requires innovation in hardware, software and coordination between the grid and data centers. For AI in particular, there is much room to develop new strategies that tune the computational load, and therefore the energy consumption, of data centers. For example, data centers can scale back accuracy to reduce workloads when training AI models.

Realizing this vision requires better modeling and forecasting. Data centers can try to better understand and predict their loads and conditions. It is also important to predict grid load and growth.

The Electric Power Research Institute's load forecasting initiative includes activities to support grid planning and operations. Comprehensive monitoring and intelligent analytics – possibly relying on AI – for both data centers and the grid are essential for accurate forecasting.

On the edge

The United States is at a critical juncture given the explosive growth of AI. It is immensely difficult to integrate hundreds of megawatts of electricity demand into already strained grids. It may be time to rethink how the industry builds data centers.

One possibility is to sustainably build more edge data centers, which are smaller and more widely distributed facilities, to bring computing to local communities. Edge data centers can also reliably add computing power to dense, urban regions without further stressing the grid. While these smaller centers currently make up 10% of data centers in the U.S., analysts predict that the market for smaller edge data centers will grow by over 20% in the next five years.

Along with converting data centers into flexible and controllable loads, innovation in the edge data center space may help make AI's energy demands much more sustainable.

Image credit: theconversation.com
