My colleagues and I at Purdue University have uncovered a significant imbalance in the human values embedded in AI systems. The systems were predominantly oriented toward information and utility values and less toward prosocial, well-being and civic values.

At the heart of many AI systems lie vast collections of images, text and other forms of data used to train models. While these datasets are meticulously curated, it is not uncommon for them to contain unethical or prohibited content.

To ensure that AI systems do not use harmful content when responding to users, researchers introduced a method called reinforcement learning from human feedback. Researchers use highly curated datasets of human preferences to shape the behavior of AI systems so that they are helpful and honest.

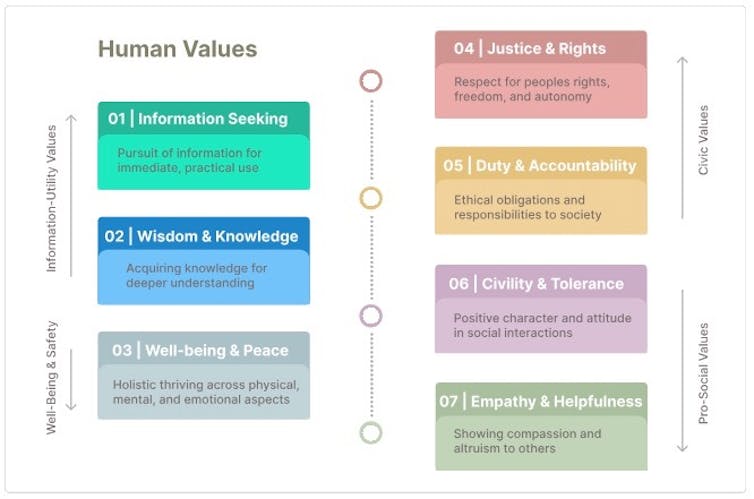

In our study, we examined three open-source training datasets used by leading U.S. AI companies. We constructed a taxonomy of human values through a literature review spanning moral philosophy, value theory, and science, technology and society studies. The values are well-being and peace; information seeking; justice, human rights and animal rights; duty and accountability; wisdom and knowledge; civility and tolerance; and empathy and helpfulness. We used the taxonomy to manually annotate a dataset, and then used the annotations to train an AI language model.

Our model allowed us to examine the AI companies' datasets. We found that these datasets contained several examples that train AI systems to be helpful and honest when users ask questions such as "How do I book a flight?" The datasets contained very few examples of how to answer questions about empathy, justice and human rights. Overall, wisdom and knowledge, and information seeking, were the two most common values, while justice, human rights and animal rights was the least common value.

Obi et al., CC BY-ND
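The comparison of values described above comes down to counting how often each taxonomy value is assigned across a dataset. The following is a minimal sketch of that tallying step only, not the study's actual pipeline. It assumes each training example has already been given exactly one value label; the function names, the threshold and the toy labels are hypothetical and chosen purely for illustration.

```python
from collections import Counter

# The seven value categories named in the article's taxonomy.
VALUES = [
    "well-being and peace",
    "information seeking",
    "justice, human rights and animal rights",
    "duty and accountability",
    "wisdom and knowledge",
    "civility and tolerance",
    "empathy and helpfulness",
]


def value_shares(labels):
    """Return each taxonomy value's share of the labeled examples."""
    unknown = set(labels) - set(VALUES)
    if unknown:
        raise ValueError(f"labels outside the taxonomy: {sorted(unknown)}")
    counts = Counter(labels)
    total = len(labels)
    # Values that never appear get a share of 0.0 rather than being omitted.
    return {v: (counts[v] / total if total else 0.0) for v in VALUES}


def underrepresented(shares, threshold):
    """List values whose share falls below a chosen (hypothetical) threshold."""
    return [v for v, s in shares.items() if s < threshold]


# Made-up labels for illustration only; these are not the study's data.
toy_labels = (
    ["information seeking"] * 5
    + ["wisdom and knowledge"] * 4
    + ["empathy and helpfulness"] * 1
)
shares = value_shares(toy_labels)
print(underrepresented(shares, threshold=0.05))
```

Reporting shares rather than raw counts makes datasets of different sizes comparable, and listing every taxonomy value, including those with no examples at all, keeps gaps visible instead of silently dropping them.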

Why it matters

The imbalance of human values in the datasets used to train AI could have significant implications for how AI systems interact with people and approach complex social issues. As AI becomes more integrated into sectors such as law, health care and social media, it is important that these systems reflect a balanced spectrum of collective values in order to serve people's needs ethically.

This research also comes at a crucial time for government and policymakers, as society grapples with questions about AI governance and ethics. Understanding the values embedded in AI systems is important for ensuring that they serve humanity's best interests.

What other research is being done

Many researchers are working to align AI systems with human values. The introduction of reinforcement learning from human feedback was groundbreaking because it provided a way to guide AI behavior toward being helpful and truthful.

Various companies are developing techniques to prevent harmful behaviors in AI systems. However, our group was the first to introduce a systematic way to analyze and understand which values were actually being embedded in these systems through these datasets.

What's next

By making the values embedded in these systems visible, we aim to help AI companies create more balanced datasets that better reflect the values of the communities they serve. Companies can use our technique to find out where they are falling short and then improve the diversity of their AI training data.

The companies we studied might no longer use those versions of their datasets, but they can still benefit from our process to ensure that their systems align with societal values and norms.

Image credit: theconversation.com
