When someone sees something that isn't there, people often refer to the experience as a hallucination. Hallucinations occur when your sensory perception does not correspond to external stimuli.

Technologies that rely on artificial intelligence can have hallucinations, too.

When an algorithmic system generates information that seems plausible but is actually inaccurate or misleading, computer scientists call it an AI hallucination. Researchers have found these behaviors in different types of AI systems, from chatbots such as ChatGPT to image generators such as Dall-E to autonomous vehicles. We are information science researchers who have studied hallucinations in AI speech recognition systems.

Wherever AI systems are used in daily life, their hallucinations can pose risks. Some may be minor: when a chatbot gives the wrong answer to a simple question, the user may end up ill-informed. In other cases, however, the stakes are much higher. From courtrooms where AI software is used to make sentencing decisions to health insurance companies that use algorithms to determine a patient's eligibility for coverage, AI hallucinations can have life-changing consequences. They can even be life-threatening: autonomous vehicles use AI to detect obstacles, other vehicles and pedestrians.

Making it up

Hallucinations and their effects depend on the type of AI system. In large language models, the underlying technology of AI chatbots, hallucinations are pieces of information that sound convincing but are false, invented or irrelevant. An AI chatbot might create a reference to a scientific article that doesn't exist or provide a historical fact that is simply wrong, yet make it sound credible.

In a 2023 court case, for example, a New York lawyer submitted a legal brief that he had written with the help of ChatGPT. A discerning judge later noticed that the brief cited a case that ChatGPT had made up. This could lead to different outcomes in courtrooms if people were unable to detect the hallucinated information.

With AI tools that can recognize objects in images, hallucinations occur when the AI generates captions that are not faithful to the image provided. Imagine a caption that describes a person as sitting on a bench when no bench appears in the image. This inaccurate information could lead to different consequences in contexts where accuracy is critical.

What causes hallucinations

Engineers build AI systems by gathering massive amounts of data and feeding it into a computational system that detects patterns in the data. The system develops methods for answering questions or performing tasks based on those patterns.

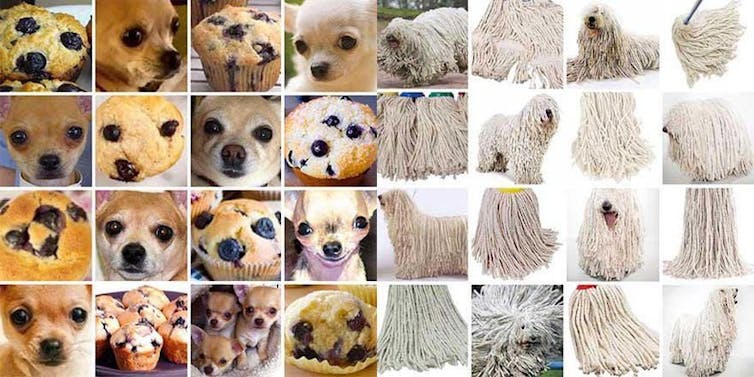

Supply an AI system with 1,000 photos of different dog breeds, labeled accordingly, and the system will soon learn to tell the difference between a poodle and a golden retriever. But feed it a photo of a blueberry muffin and, as machine learning researchers have shown, it may tell you that the muffin is a chihuahua.

Image: Shenkman et al., CC BY

When a system doesn't understand the question or the information it is presented with, it may hallucinate. Hallucinations often occur when the model fills in gaps based on similar contexts from its training data, or when it is built using biased or incomplete training data. This leads to incorrect guesses, as in the case of the mislabeled blueberry muffin.
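To make the muffin example concrete, here is a minimal sketch of why a classifier trained only on dog breeds has to answer with a dog breed. Everything in it is an assumption for illustration: the label list, the raw scores and the function names are invented, and no real image model is involved. It shows only that a softmax output spreads all of its probability across the labels the system already knows.

```python
import math

# Hypothetical label set: this toy classifier has only ever seen dog breeds.
LABELS = ["poodle", "golden retriever", "chihuahua"]

def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def classify(scores):
    """Return the most probable known label and its probability."""
    probs = softmax(scores)
    best = max(range(len(LABELS)), key=lambda i: probs[i])
    return LABELS[best], probs[best]

# Made-up raw scores for a blueberry muffin photo. The muffin's round shape
# and dark spots are assumed to resemble the chihuahua pattern most closely.
muffin_scores = [0.2, 0.1, 1.5]

label, prob = classify(muffin_scores)
print(f"{label} ({prob:.2f})")
```

Because the probabilities must sum to 1 over the three known breeds, "none of these" is not an available answer, so the system returns its closest learned pattern even for an input unlike anything in its training data.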

It is important to distinguish between AI hallucinations and intentionally creative AI outputs. When an AI system is asked to be creative, as when writing a story or generating artistic images, its novel outputs are expected and desired. Hallucinations, on the other hand, occur when an AI system is asked to provide factual information or perform specific tasks but instead generates incorrect or misleading content while presenting it as accurate.

The key difference lies in context and purpose: creativity is appropriate for artistic tasks, while hallucinations are problematic when accuracy and reliability are required.

To address these problems, companies have proposed guidelines for AI responses. Nevertheless, these problems can persist in popular AI tools.

https://www.youtube.com/watch?v=CFQTFVWOFG0

What's at risk

The impact of an output such as calling a blueberry muffin a chihuahua may seem trivial, but consider a fatal traffic accident caused by an autonomous vehicle that fails to identify an object. An autonomous military drone that misidentifies a target could put civilians' lives in danger.

For AI tools that provide automatic speech recognition, hallucinations are AI transcriptions that include words or phrases that were never actually spoken. This is more likely to occur in noisy environments, where an AI system may add new or irrelevant words in an attempt to decipher background noise such as a passing truck or a crying child.

As these systems are integrated more often into health care, social service and legal settings, hallucinations can lead to inaccurate clinical or legal outcomes that harm patients, criminal defendants or families in need of social support.

Check AI's work

Regardless of AI companies' efforts to mitigate hallucinations, users should remain vigilant and question AI outputs, especially when they are used in contexts that require precision and accuracy. Double-checking AI-generated information against trusted sources when necessary and recognizing the limitations of these tools are essential steps for minimizing their risks.

image credit : theconversation.com
